Installing tez-0.8.4 on hadoop-2.7.4

# References

apache tez on hadoop-2.7.1

# 获取软件地址

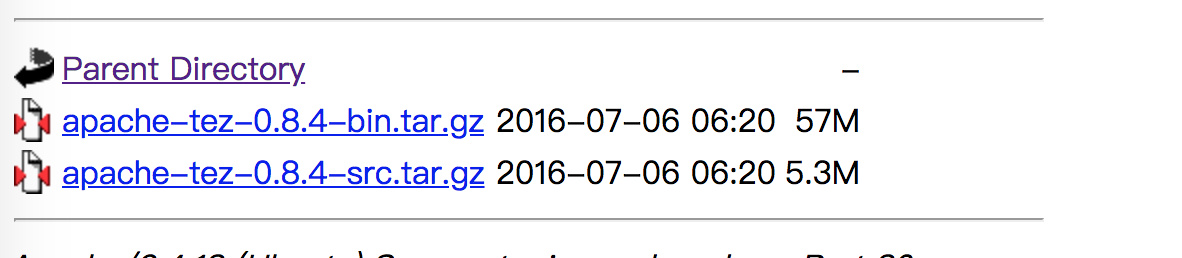

wget http://mirrors.shu.edu.cn/apache/tez/0.8.4/apache-tez-0.8.4-bin.tar.gz

通过浏览器打开以上链接后

其中bin是编译后的tar包,src需要自行编译。这里直接下载编译后的即可。

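# Extracting the tar.gz package

tar -zxvf apache-tez-0.8.4-bin.tar.gz

Then copy the extracted apache-tez-0.8.4-bin directory to /usr/local/hadoop/tez-0.8.4, the path the later steps use. You can pick a different location, as long as you use it consistently.

cp -r apache-tez-0.8.4-bin /usr/local/hadoop/tez-0.8.4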

# 上传tez.tar.gz包到hdfs上

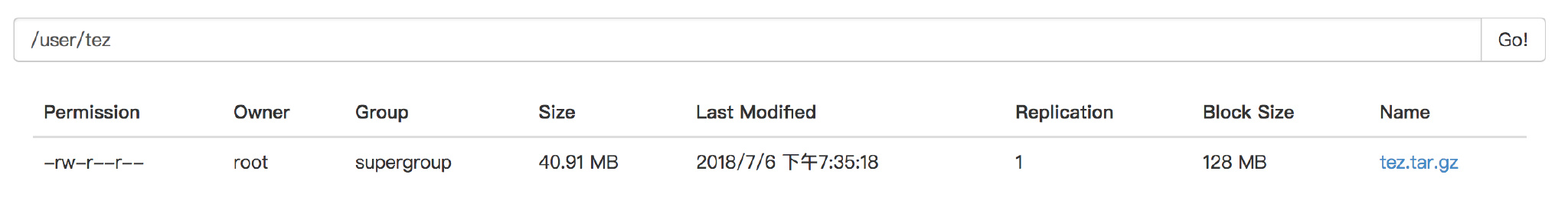

将apache-tez-0.8.4-bin/share/tez.tar.gz文件上传到hdfs的某个目录上

hadoop fs -mkdir /user/tez

hadoop fs -put tez.tar.gz /user/tez

2

上传成功后的目录如下:
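The upload step can be made repeatable. The following is a minimal sketch, not part of the original walkthrough: the `run` and `upload_tez` helpers and the `DRY_RUN` switch are hypothetical names introduced here, and the local tarball path assumes Tez was copied to /usr/local/hadoop/tez-0.8.4. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: repeatable upload of tez.tar.gz to HDFS.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# upload_tez TARBALL HDFS_DIR
upload_tez() {
  tarball=$1
  hdfs_dir=$2
  run hadoop fs -mkdir -p "$hdfs_dir"           # -p: no error if the directory exists
  run hadoop fs -put -f "$tarball" "$hdfs_dir"  # -f: overwrite an earlier upload
  run hadoop fs -ls "$hdfs_dir"                 # list the directory to confirm
}

# Assumed local path; adjust to wherever the tarball actually lives.
upload_tez /usr/local/hadoop/tez-0.8.4/share/tez.tar.gz /user/tez
```

Using `-mkdir -p` and `-put -f` means the script can be rerun after a failed or partial upload without manual cleanup.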

# Configuring tez-site.xml

Copy ${TEZ_HOME}/conf/tez-default-template.xml into Hadoop's etc/hadoop directory and rename it to tez-site.xml.

[root@mini1 conf]# cp tez-default-template.xml /usr/local/hadoop/hadoop-2.7.4/etc/hadoop/

[root@mini1 conf]# cd /usr/local/hadoop/hadoop-2.7.4/etc/hadoop/

[root@mini1 conf]# mv tez-default-template.xml tez-site.xml


Edit ${HADOOP_HOME}/etc/hadoop/tez-site.xml and set tez.lib.uris (around line 700) to the HDFS path you uploaded the tarball to.

<property>

<name>tez.lib.uris</name>

<type>string</type>

<value>${fs.default.name}/user/tez/tez.tar.gz</value>

</property>
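Because the template file is long, it is easy to edit the wrong property. As a rough check, the sketch below prints the value currently set for a property. The `get_prop` helper is a hypothetical name introduced here, and it is a line-based scan rather than a real XML parser, so it assumes `<name>` and `<value>` each sit on a single line and that the property is not inside an XML comment.

```shell
#!/bin/sh
# Sketch: print the <value> of a named property from a Hadoop-style XML file.
# get_prop NAME FILE
get_prop() {
  awk -v name="$1" '
    # Remember that we have seen the <name> line of the wanted property.
    index($0, "<name>" name "</name>") { found = 1 }
    # The first <value> line after it holds the value; strip the tags and stop.
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$2"
}

# Assumed location of tez-site.xml; HADOOP_CONF_DIR overrides the default.
conf=${HADOOP_CONF_DIR:-/usr/local/hadoop/hadoop-2.7.4/etc/hadoop}/tez-site.xml
if [ -f "$conf" ]; then
  get_prop tez.lib.uris "$conf"
fi
```

The printed value should end in the path used for the upload, /user/tez/tez.tar.gz.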


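# Configuring environment variables

Edit ${HADOOP_HOME}/etc/hadoop/hadoop-env.sh and add the following. Check the paths carefully: TEZ_CONF_DIR is the directory that contains tez-site.xml, not the file itself.

export TEZ_CONF_DIR=/usr/local/hadoop/hadoop-2.7.4/etc/hadoop

export TEZ_JARS=/usr/local/hadoop/tez-0.8.4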

export HADOOP_CLASSPATH=${HADOOP_CLASSPATH}:${TEZ_CONF_DIR}:${TEZ_JARS}/*:${TEZ_JARS}/lib/*
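Wrong paths in these variables are a common source of trouble, so a quick sanity check can help. This is a minimal sketch: `check_tez_env` is a hypothetical helper, and it assumes the Tez binary package keeps its tez-api jar at the top level with dependencies under lib/, and that the configuration directory is Hadoop's etc/hadoop.

```shell
#!/bin/sh
# Sketch: verify that the directories used for TEZ_CONF_DIR and TEZ_JARS look right.
# check_tez_env CONF_DIR TEZ_JARS_DIR
check_tez_env() {
  conf_dir=$1
  jars_dir=$2
  status=0
  # CONF_DIR must be a directory that contains tez-site.xml.
  if [ ! -f "$conf_dir/tez-site.xml" ]; then
    echo "missing: $conf_dir/tez-site.xml"
    status=1
  fi
  # Assumption: the Tez jars sit directly in TEZ_JARS_DIR.
  if ! ls "$jars_dir"/tez-api-*.jar >/dev/null 2>&1; then
    echo "missing: $jars_dir/tez-api-*.jar"
    status=1
  fi
  # Assumption: third-party dependencies sit in TEZ_JARS_DIR/lib.
  if [ ! -d "$jars_dir/lib" ]; then
    echo "missing: $jars_dir/lib"
    status=1
  fi
  return "$status"
}

if check_tez_env /usr/local/hadoop/hadoop-2.7.4/etc/hadoop /usr/local/hadoop/tez-0.8.4; then
  echo "Tez paths look consistent"
fi
```

Passing the path of tez-site.xml itself as the first argument makes the check fail, which is the mistake it is mainly meant to catch.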


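# Copying files to the other worker nodes

Here mini1 is the master node, and mini2 and mini3 are worker nodes.

The following need to be copied:

- everything under /usr/local/hadoop/tez-0.8.4

- ${HADOOP_HOME}/etc/hadoop/hadoop-env.sh

- ${HADOOP_HOME}/etc/hadoop/tez-site.xml

#copy the tez-0.8.4 directory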

[root@mini1 conf]# scp -r /usr/local/hadoop/tez-0.8.4/ root@mini2:/usr/local/hadoop/

[root@mini1 conf]# scp -r /usr/local/hadoop/tez-0.8.4/ root@mini3:/usr/local/hadoop/

#copy hadoop-env.sh

[root@mini1 conf]# scp hadoop-env.sh root@mini2:/usr/local/hadoop/hadoop-2.7.4/etc/hadoop/

[root@mini1 conf]# scp hadoop-env.sh root@mini3:/usr/local/hadoop/hadoop-2.7.4/etc/hadoop/

#copy tez-site.xml

[root@mini1 conf]# scp tez-site.xml root@mini2:/usr/local/hadoop/hadoop-2.7.4/etc/hadoop/

[root@mini1 conf]# scp tez-site.xml root@mini3:/usr/local/hadoop/hadoop-2.7.4/etc/hadoop/
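With more than a couple of worker nodes, the six scp commands are easier to manage as a loop. The sketch below is one way to do it, assuming passwordless root SSH to each node. The `run` and `sync_tez` helpers and the `DRY_RUN` switch are hypothetical names introduced here. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch: copy the Tez directory and the two edited config files to each worker.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# sync_tez HADOOP_CONF_DIR TEZ_DIR NODE...
sync_tez() {
  conf_dir=$1
  tez_dir=$2
  shift 2
  for node in "$@"; do
    # Copy the whole Tez directory into the same parent directory on the worker.
    run scp -r "$tez_dir" "root@$node:$(dirname "$tez_dir")/"
    # Copy both config files in one scp call.
    run scp "$conf_dir/hadoop-env.sh" "$conf_dir/tez-site.xml" "root@$node:$conf_dir/"
  done
}

sync_tez /usr/local/hadoop/hadoop-2.7.4/etc/hadoop /usr/local/hadoop/tez-0.8.4 mini2 mini3
```

Adding a node then only means appending its hostname to the last line.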


# Restarting the Hadoop cluster

[root@mini1 ~]# stop-all.sh

[root@mini1 ~]# start-all.sh


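# Test demo

Run tez-examples-0.8.4.jar, which ships with Tez. The /input directory must already exist on HDFS and contain some text files.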

[root@mini1 conf]# cd /usr/local/hadoop/tez-0.8.4

[root@mini1 conf]# hadoop jar tez-examples-0.8.4.jar orderedwordcount /input /output
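The example run can be wrapped so that it checks its input first and shows the output afterwards. This is a sketch under assumptions: `run` and `run_wordcount` are hypothetical helpers, and the output directory is removed before the run on the assumption that the job may refuse to write into an existing directory. Note that this deletes data when run with DRY_RUN=0. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch: run the Tez orderedwordcount example with basic pre- and post-checks.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# run_wordcount JAR INPUT_DIR OUTPUT_DIR
run_wordcount() {
  jar=$1
  input=$2
  output=$3
  # Stop early if the input directory does not exist on HDFS.
  run hadoop fs -test -d "$input" || {
    echo "input directory $input not found" >&2
    return 1
  }
  # Assumption: an existing output directory would make the job fail, so remove it.
  run hadoop fs -rm -r -f "$output"
  run hadoop jar "$jar" orderedwordcount "$input" "$output"
  # List the result files.
  run hadoop fs -ls "$output"
}

run_wordcount /usr/local/hadoop/tez-0.8.4/tez-examples-0.8.4.jar /input /output
```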


# Testing in Hive

Pick any table in Hive to test with.

# Without Tez

hive> show tables;

OK

dim_is_dirty_reason

t_user

Time taken: 0.942 seconds, Fetched: 2 row(s)

hive> desc t_user;

OK

city_code string 城市编码

area_code string 区代码

Time taken: 0.182 seconds, Fetched: 2 row(s)

hive> select max(city_code) from t_user;

Query ID = root_20180706222011_82d404af-01e7-4fba-9617-3ac0826b41f5

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1530884943794_0002, Tracking URL = http://mini1:18088/proxy/application_1530884943794_0002/

Kill Command = /usr/local/hadoop/hadoop-2.7.4/bin/hadoop job -kill job_1530884943794_0002

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

2018-07-06 22:20:20,058 Stage-1 map = 0%, reduce = 0%

2018-07-06 22:20:24,318 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 0.71 sec

2018-07-06 22:20:25,361 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.45 sec

2018-07-06 22:20:30,575 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 2.28 sec

MapReduce Total cumulative CPU time: 2 seconds 280 msec

Ended Job = job_1530884943794_0002

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Reduce: 1 Cumulative CPU: 2.28 sec HDFS Read: 11402 HDFS Write: 2 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 280 msec

OK

a

Time taken: 20.546 seconds, Fetched: 1 row(s)

hive>


# Running with Tez

First run set hive.execution.engine=tez; so that the queries in this session run on the Tez execution engine.

hive> set hive.execution.engine=tez;

hive> select max(city_code) from t_user;

Query ID = root_20180706222140_2ce99175-b55e-4e91-89f0-7d0bbc2a3477

Total jobs = 1

Launching Job 1 out of 1

Status: Running (Executing on YARN cluster with App id application_1530884943794_0003)

--------------------------------------------------------------------------------

VERTICES STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

--------------------------------------------------------------------------------

Map 1 .......... SUCCEEDED 2 2 0 0 0 0

Reducer 2 ...... SUCCEEDED 1 1 0 0 0 0

--------------------------------------------------------------------------------

VERTICES: 02/02 [==========================>>] 100% ELAPSED TIME: 13.27 s

--------------------------------------------------------------------------------

OK

a

Time taken: 21.072 seconds, Fetched: 1 row(s)

hive>
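The same comparison can be scripted from the shell instead of typed into the Hive prompt. The sketch below runs one query once per engine through `hive --hiveconf ... -e`. The `run` and `run_with_engine` helpers and the `DRY_RUN` switch are hypothetical names introduced here, and the query is the one from this walkthrough. By default it only prints the commands, without the shell quoting around the query.

```shell
#!/bin/sh
# Sketch: run the same Hive query on the mr and tez execution engines.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# run_with_engine ENGINE QUERY
run_with_engine() {
  engine=$1
  query=$2
  # --hiveconf sets the engine for this invocation only.
  run hive --hiveconf hive.execution.engine="$engine" -e "$query"
}

for engine in mr tez; do
  run_with_engine "$engine" "select max(city_code) from t_user;"
done
```

Setting the engine per invocation leaves the default in hive-site.xml untouched, which matches the temporary, session-level switch used above.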



# Summary

The above only switches to Tez temporarily. To make all MapReduce jobs run on Tez, change the value of mapreduce.framework.name in mapred-site.xml from yarn to yarn-tez:

<property>

<name>mapreduce.framework.name</name>

<value>yarn-tez</value>

</property>


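- Configure the environment variables in hadoop-env.sh

- In tez-site.xml, set tez.lib.uris to the HDFS path of the uploaded tez.tar.gz

- Copy the configuration to the other worker nodes

- Restart the Hadoop cluster

- In the Hive command line, run set hive.execution.engine=tez;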